Release notes

This page provides the release notes for Video Calling.

Video SDK

If your target platform is Android 12 or higher, add the android.permission.BLUETOOTH_CONNECT permission to the AndroidManifest.xml file of the Android project to enable the Bluetooth function of the Android system.

v4.2.2

v4.2.2 was released on July 27, 2023.

New features


Wildcard token

This release introduces wildcard tokens. Agora supports using a wildcard character as the channel name when generating a token. The generated token can be used to join any channel as long as you use the same user ID. In scenarios involving multiple channels, such as switching between channels, a wildcard token means you do not have to request a new token every time a user joins a new channel, which reduces the load on your token server. See Secure authentication with tokens.

All 4.x SDKs support using wildcard tokens.
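A small token cache on the client shows what a wildcard token changes. This is a minimal sketch, not Agora code. `TokenCache`, `requestFromServer`, and the `"*"` wildcard value are hypothetical, and the call to the token server is stubbed out. With a wildcard token, one server request covers every channel the same user ID joins. Without one, each new channel costs a separate request.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical client-side helper; not part of the Agora SDK.
final class TokenCache {
    // Assumed wildcard channel name used when your token server generates a wildcard token.
    static final String WILDCARD_CHANNEL = "*";

    private final Map<String, String> tokens = new HashMap<>();
    private int serverRequests = 0;

    private static String key(int uid, String channel) {
        return uid + "/" + channel;
    }

    // Stub standing in for a network call to your own token server.
    private String requestFromServer(int uid, String channel) {
        serverRequests++;
        return "token:" + key(uid, channel);
    }

    // Returns a cached token for joining `channel` as `uid`, fetching one only on a cache miss.
    // With useWildcard, every channel shares the single wildcard token for this uid.
    String tokenFor(int uid, String channel, boolean useWildcard) {
        String target = useWildcard ? WILDCARD_CHANNEL : channel;
        return tokens.computeIfAbsent(key(uid, target), k -> requestFromServer(uid, target));
    }

    int serverRequests() {
        return serverRequests;
    }
}
```

Calling `tokenFor(42, "room-a", true)` and then `tokenFor(42, "room-b", true)` triggers one server request. The same two calls with `useWildcard` set to `false` trigger two.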

Preloading channels

This release adds the preloadChannel[1/2] and preloadChannel[2/2] methods, which allow a user whose role is set as audience to preload channels before joining one. Calling these methods can shorten the time it takes to join a channel, which reduces the time it takes for audience members to hear and see the host. When preloading more than one channel, Agora recommends using a wildcard token. This avoids requesting a new token every time you join a new channel and saves time when switching between channels. See Secure authentication with tokens.


Customized background color of video canvas

In this release, the backgroundColor member has been added to VideoCanvas, which allows you to customize the background color of the video canvas when setting the properties of local or remote video display.

Improvements


Improved camera capture effect

As of this release, camera exposure adjustment is supported. This release adds isCameraExposureSupported to query whether the device supports exposure adjustment and setCameraExposureFactor to set the exposure ratio of the camera.

Virtual background algorithm upgrade

This version has upgraded the portrait segmentation algorithm of the virtual background, which comprehensively improves the accuracy of portrait segmentation, the smoothness of the portrait edge with the virtual background, and the fit of the edge when the person moves. In addition, it optimizes the precision of the person's edge in scenarios such as meetings, offices, homes, and under backlight or weak light conditions.


Channel media relay

The number of target channels for media relay has been increased to 6. When calling startOrUpdateChannelMediaRelay and startOrUpdateChannelMediaRelayEx, you can specify up to 6 target channels.
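As of v4.2.2, the relay APIs accept at most 6 target channels, so an app may want to check its target list before calling startOrUpdateChannelMediaRelay. The helper below is a hypothetical sketch; `RelayTargets` is not part of the SDK. Its duplicate check is an extra assumption of this sketch, not a documented SDK rule.

```java
import java.util.HashSet;
import java.util.List;

// Hypothetical pre-flight check; not part of the Agora SDK.
final class RelayTargets {
    // Upper bound stated in the v4.2.2 release notes.
    static final int MAX_TARGET_CHANNELS = 6;

    // Throws IllegalArgumentException if the target list cannot be relayed as-is.
    static void check(List<String> targets) {
        if (targets.isEmpty()) {
            throw new IllegalArgumentException("no target channels");
        }
        if (targets.size() > MAX_TARGET_CHANNELS) {
            throw new IllegalArgumentException(
                targets.size() + " target channels; the limit is " + MAX_TARGET_CHANNELS);
        }
        // Assumption of this sketch: listing the same channel twice is treated as a mistake.
        if (new HashSet<>(targets).size() != targets.size()) {
            throw new IllegalArgumentException("duplicate target channel");
        }
    }
}
```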

Enhancement in video codec query capability

To improve the video codec query capability, this release adds the codecLevels member in CodecCapInfo. After successfully calling queryCodecCapability, you can use codecLevels to get the device's hardware and software decoding capability levels for the H.264 and H.265 video formats.

This release includes the following additional improvements:

- To improve the switching experience between multiple audio routes, this release adds the setRouteInCommunicationMode method. This method can switch the audio route from a Bluetooth headphone to the earpiece, wired headphone, or speaker in communication volume mode (MODE_IN_COMMUNICATION).

- The SDK automatically adjusts the frame rate of the sending end based on the screen sharing scenario. Especially in document sharing scenarios, this feature keeps the video bitrate on the sending end within the expected range to improve transmission efficiency and reduce network burden.

- To help users understand the reasons for more types of remote video state changes, the REMOTE_VIDEO_STATE_REASON_CODEC_NOT_SUPPORT enumeration has been added to the onRemoteVideoStateChanged callback. It indicates that the local video decoder does not support decoding the received remote video stream.

Issues fixed

This release fixed the following issues:

- Slow channel reconnection after the connection was interrupted due to network reasons.

- In screen sharing scenarios, the delay of seeing the shared screen was occasionally higher than expected on some devices.

- In custom video capturing scenarios, setBeautyEffectOptions, setLowlightEnhanceOptions, setVideoDenoiserOptions, and setColorEnhanceOptions could not load extensions automatically.

API changes

Added

- setCameraExposureFactor
- isCameraExposureSupported
- preloadChannel[1/2]
- preloadChannel[2/2]
- updatePreloadChannelToken
- setRouteInCommunicationMode
- CodecCapLevels
- VideoCodecCapLevel
- backgroundColor in VideoCanvas
- codecLevels in CodecCapInfo
- REMOTE_VIDEO_STATE_REASON_CODEC_NOT_SUPPORT

v4.2.1

This version was released on June 21, 2023.

Improvements

This version improves the network transmission strategy, enhancing the smoothness of audio and video interactions.

Issues fixed

This version fixed the following issues:

- Inability to join channels caused by the SDK's incompatibility with some older versions of AccessToken.

- After the sending end called setAINSMode to activate AI noise reduction, the receiving end occasionally heard echo.

- Brief noise occurred while playing media files using the media player.

- In screen sharing scenarios, some Android devices experienced choppy video on the receiving end.

v4.2.0

v4.2.0 was released on May 24, 2023.

Compatibility changes

If you use the features mentioned in this section, ensure that you modify the implementation of the relevant features after upgrading the SDK.

1. Video data acquisition

A new parameter, sourceType, has been added to the onCaptureVideoFrame and onPreEncodeVideoFrame callbacks to indicate the specific video source type.

2. Channel media options

- publishCustomAudioTrackEnableAec in ChannelMediaOptions is deleted. Use publishCustomAudioTrack instead.

- publishTrancodedVideoTrack in ChannelMediaOptions is renamed to publishTranscodedVideoTrack.

- publishCustomAudioSourceId in ChannelMediaOptions is renamed to publishCustomAudioTrackId.

3. Miscellaneous

- onApiCallExecuted is deleted. Agora recommends getting the results of API calls through the relevant channel and media callbacks.

- enableDualStreamMode[1/2] and enableDualStreamMode[2/2] are deprecated. Use setDualStreamMode[1/2] and setDualStreamMode[2/2] instead.

- startChannelMediaRelay, updateChannelMediaRelay, startChannelMediaRelayEx, and updateChannelMediaRelayEx are deprecated. Use startOrUpdateChannelMediaRelay and startOrUpdateChannelMediaRelayEx instead.

New features

1. AI Noise Suppression

This release introduces public APIs for the AI Noise Suppression function. Once it is enabled, the SDK automatically detects and reduces background noise. Whether users are in bustling public venues or in real-time competitive settings that demand fast responses, this function keeps audio clear and gives users a better audio experience. You can enable this function through the new setAINSMode method and set the noise suppression mode to balanced, aggressive, or low latency according to your scenario.

2. Enhanced Virtual Background

To increase the fun of real-time video calls and protect user privacy, this version has enhanced the Virtual Background function. You can now set custom backgrounds of various types by calling the enableVirtualBackground method, including:

- Process the background as alpha information without replacement, only separating the portrait and the background. This can be combined with the local video mixing feature to achieve a portrait-in-picture effect.

- Replace the background with various formats of local videos.

See Virtual Background documentation.

3. Video scenario settings

This release introduces setVideoScenario for setting the video application scenario. The SDK automatically applies best-practice strategies for the scenario you set, adjusting key performance indicators to optimize video quality and improve user experience. Whether it is a formal business meeting or a casual online gathering, this feature helps the video quality meet the requirements.

Currently, this feature provides targeted optimizations for real-time video conferencing scenarios, including:

- Automatically activate multiple anti-weak-network technologies to enhance the capability and performance of low-quality video streams in meeting scenarios where high bitrate is required, ensuring smoothness when multiple streams are subscribed by the receiving end.

- Monitor the number of subscribers for the high-quality and low-quality video streams in real time, dynamically adjusting the configuration of the high-quality stream and dynamically enabling or disabling the low-quality stream, to save uplink bandwidth and consumption.

4. Local video mixing

This release adds the local video mixing feature. You can use the startLocalVideoTranscoder method to mix and render multiple video streams locally, such as camera-captured video, screen sharing streams, video files, images, etc. This allows you to achieve custom layouts and effects, making it easy to create personalized video display effects to meet various scenario requirements, such as remote meetings, live streaming, online education, while also supporting features like portrait-in-picture effect.

Additionally, the SDK provides the updateLocalTranscoderConfiguration method and the onLocalVideoTranscoderError callback. After enabling local video mixing, you can use the updateLocalTranscoderConfiguration method to update the video mixing configuration. If an error occurs when starting local video mixing or updating the configuration, you can get the reason for the failure through the onLocalVideoTranscoderError callback.

5. Cross-device synchronization

In real-time collaborative singing scenarios, network issues can cause inconsistencies in the downlinks of different client devices. To address this, this release introduces getNtpWallTimeInMs for obtaining the current Network Time Protocol (NTP) time. By using this method to synchronize lyrics and music across multiple client devices, users can achieve synchronized singing and lyrics progression, resulting in a better collaborative experience.
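One way to use getNtpWallTimeInMs for lyric synchronization is to give every participant the same song start time in NTP milliseconds. Each client then works out the song position from its own NTP reading. The sketch below assumes that design. `LyricSync` and its methods are hypothetical, and the NTP values are passed in as arguments rather than read from the SDK.

```java
import java.util.List;

// Hypothetical helper; the NTP timestamps would come from getNtpWallTimeInMs on each client.
final class LyricSync {
    static final class Line {
        final long startMs;  // offset of this lyric line from the start of the song
        final String text;

        Line(long startMs, String text) {
            this.startMs = startMs;
            this.text = text;
        }
    }

    // Song position in ms, derived from the shared NTP start time and this client's NTP "now".
    static long positionMs(long songStartNtpMs, long nowNtpMs) {
        return Math.max(0L, nowNtpMs - songStartNtpMs);
    }

    // Index of the lyric line active at positionMs, or -1 before the first line.
    // Lines must be sorted by startMs; binary search finds the last line that has started.
    static int activeLine(List<Line> lines, long positionMs) {
        int lo = 0, hi = lines.size() - 1, ans = -1;
        while (lo <= hi) {
            int mid = (lo + hi) >>> 1;
            if (lines.get(mid).startMs <= positionMs) {
                ans = mid;
                lo = mid + 1;
            } else {
                hi = mid - 1;
            }
        }
        return ans;
    }
}
```

Every client reads the same NTP clock, so clients whose downlinks differ still highlight the same line at the same moment.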

Improvements

1. Improved voice changer

This release introduces the setLocalVoiceFormant method that allows you to adjust the formant ratio to change the timbre of the voice. This method can be used together with the setLocalVoicePitch method to adjust the pitch and timbre of voice at the same time, enabling a wider range of voice transformation effects.

2. Enhanced screen share

This release adds the queryScreenCaptureCapability method, which is used to query the screen capture capabilities of the current device. To get optimal screen sharing performance, particularly at high frame rates such as 60 fps, Agora recommends that you first use this method to query the device's maximum supported frame rate.

This release also adds the setScreenCaptureScenario method, which is used to set the scenario type for screen sharing. The SDK automatically adjusts the smoothness and clarity of the shared screen based on the scenario type you set.

3. Improved compatibility with audio file types

As of v4.2.0, you can use the following methods to open files with a URI starting with content://:

- startAudioMixing[2/2]
- playEffect[3/3]
- open[2/2]
- openWithMediaSource

4. Audio and video synchronization

For custom video and audio capture scenarios, this release introduces getCurrentMonotonicTimeInMs for obtaining the current monotonic time. By using this value as the timestamp of audio and video frames, developers can accurately control the timing of their audio and video streams and keep them synchronized.
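The sketch below derives audio frame timestamps for custom capture from a single monotonic base time, for example the value getCurrentMonotonicTimeInMs returns when capture starts. `FrameClock` is hypothetical. It computes later timestamps from the number of samples pushed instead of rereading the clock for every frame. That is a design assumption meant to keep timestamps free of capture-thread jitter.

```java
// Hypothetical helper for custom audio capture; not an Agora API.
final class FrameClock {
    private final long baseMs;     // monotonic time (ms) when capture started, e.g. from getCurrentMonotonicTimeInMs()
    private final int sampleRate;  // samples per second per channel
    private long samplesSent = 0;  // samples per channel pushed so far

    FrameClock(long baseMs, int sampleRate) {
        this.baseMs = baseMs;
        this.sampleRate = sampleRate;
    }

    // Returns the timestamp for the next frame, then advances by that frame's length.
    long nextAudioTimestampMs(int samplesPerChannel) {
        long ts = baseMs + samplesSent * 1000L / sampleRate;
        samplesSent += samplesPerChannel;
        return ts;
    }
}
```

For 10 ms frames at 48 kHz (480 samples per channel), successive calls return the base time plus 0, 10, 20 ms, and so on. Video frames would be stamped against the same base so both streams share one time reference.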

5. Multi-camera capture

This release introduces startCameraCapture. By calling this method multiple times and specifying the sourceType parameter, developers can start capturing video streams from multiple cameras for local video mixing or multi-channel publishing. This is particularly useful for scenarios such as remote medical care and online education, where multiple cameras need to be connected.

6. Channel media relay

This release introduces startOrUpdateChannelMediaRelay and startOrUpdateChannelMediaRelayEx, which provide a simpler and smoother way to start and update media relay across channels. With these methods, developers can use a single method both to start media relay across channels and to update the target channels. Additionally, the internal interaction frequency has been optimized, which reduces the latency of function calls.

7. Custom audio tracks

To better meet the needs of custom audio capture scenarios, this release adds createCustomAudioTrack and destroyCustomAudioTrack for creating and destroying custom audio tracks. It also provides two types of audio tracks to choose from, which further improves the flexibility of capturing external audio sources:

- Mixable audio track: Supports mixing multiple external audio sources and publishing them to the same channel, suitable for multi-channel audio capture scenarios.

- Direct audio track: Only supports publishing one external audio source to a single channel, suitable for low-latency audio capture scenarios.

Issues fixed

This release fixed the following issues:

- Occasional crashes occurred on Android devices when users joined or left a channel.

- When the host frequently switched the user role between broadcaster and audience in a short period of time, the audience members could not hear the audio of the host.

- Occasional failure when enabling in-ear monitoring.

- Occasional echo.

- Abnormal client status caused by an exception in the onRemoteAudioStateChanged callback.

API changes

Added

- startCameraCapture
- stopCameraCapture
- startOrUpdateChannelMediaRelay
- startOrUpdateChannelMediaRelayEx
- getNtpWallTimeInMs
- setVideoScenario
- getCurrentMonotonicTimeInMs
- startLocalVideoTranscoder
- updateLocalTranscoderConfiguration
- onLocalVideoTranscoderError
- queryScreenCaptureCapability
- setScreenCaptureScenario
- setAINSMode
- createCustomAudioTrack
- destroyCustomAudioTrack
- AudioTrackConfig
- AudioTrackType
- VideoScenario
- The mDomainLimit and mAutoRegisterAgoraExtensions members in RtcEngineConfig
- The sourceType parameter in the onCaptureVideoFrame and onPreEncodeVideoFrame callbacks
- BACKGROUND_NONE(0)
- BACKGROUND_VIDEO(4)

Deprecated

- enableDualStreamMode[1/2]
- enableDualStreamMode[2/2]
- startChannelMediaRelay
- startChannelMediaRelayEx
- updateChannelMediaRelay
- updateChannelMediaRelayEx
- onChannelMediaRelayEvent

Deleted

- onApiCallExecuted
- publishCustomAudioTrackEnableAec in ChannelMediaOptions

v4.1.1

v4.1.1 was released on February 8, 2023.

Compatibility changes

As of this release, the SDK optimizes the video encoder algorithm and upgrades the default video encoding resolution from 640 × 360 to 960 × 540 to accommodate improvements in device performance and network bandwidth, providing users with a full-link HD experience in various audio and video interaction scenarios.

Call the setVideoEncoderConfiguration method to set the expected video encoding resolution in the video encoding parameters configuration.

New features

1. Instant frame rendering

This release adds the enableInstantMediaRendering method to enable instant rendering mode for audio and video frames, which can speed up the first video or audio frame rendering after the user joins the channel.

2. Video rendering tracing

This release adds the startMediaRenderingTracing and startMediaRenderingTracingEx methods. The SDK starts tracing the rendering status of the video frames in the channel from the moment this method is called and reports information about the event through the onVideoRenderingTracingResult callback.

Agora recommends that you use this method in conjunction with the UI settings, such as buttons and sliders, in your app. For example, call this method when the user clicks Join Channel and then get the indicators in the video frame rendering process through the onVideoRenderingTracingResult callback.

This enables developers to optimize the indicators and improve the user experience.

Improvements

1. Video frame observer

As of this release, the SDK optimizes the onRenderVideoFrame callback, and the meaning of the return value is different depending on the video processing mode:

- When the video processing mode is PROCESS_MODE_READ_ONLY, the return value is reserved for future use.

- When the video processing mode is PROCESS_MODE_READ_WRITE, the SDK receives the video frame when the return value is true. The video frame is discarded when the return value is false.
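The return-value rules above fit in a few lines of code. This is an illustrative model only. `RenderDispatch`, its `ProcessMode` enum (which mirrors PROCESS_MODE_READ_ONLY and PROCESS_MODE_READ_WRITE), and the `int[]` frame representation are hypothetical. They do not reflect how the SDK dispatches onRenderVideoFrame internally.

```java
// Illustrative model of the documented return-value semantics; not SDK code.
final class RenderDispatch {
    enum ProcessMode { READ_ONLY, READ_WRITE }

    interface Observer {
        boolean onRenderVideoFrame(int[] frame);
    }

    // Returns the frame that goes on to rendering, or null if it is discarded.
    static int[] dispatch(ProcessMode mode, Observer observer, int[] frame) {
        if (mode == ProcessMode.READ_ONLY) {
            // Read-only: the observer sees a copy, so edits have no effect, and the return value is ignored.
            observer.onRenderVideoFrame(frame.clone());
            return frame;
        }
        // Read-write: edits to `frame` are kept; returning false drops the frame.
        return observer.onRenderVideoFrame(frame) ? frame : null;
    }
}
```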

2. Super resolution

This release improves the performance of super resolution. To make super resolution easier to use, this release removes enableRemoteSuperResolution. Super resolution is now part of the online video quality enhancement strategies, which do not require extra configuration.

Issues fixed

This release fixes the following issues:

- Playing audio files with a sample rate of 48 kHz failed.

- Crashes occurred after users set the video resolution to 3840 × 2160 and started CDN streaming on Xiaomi Redmi 9A devices.

- In real-time chorus scenarios, remote users heard noises and echoes when an OPPO R11 device joined the channel in loudspeaker mode.

- When the playback of the local music finished, the onAudioMixingFinished callback was not properly triggered.

- When using a video frame observer, the first video frame was occasionally missed on the receiver's end.

- When sharing screens in scenarios involving multiple channels, remote users occasionally saw black screens.

- Switching to the rear camera with the virtual background enabled occasionally caused the background to be inverted.

- When there were multiple video streams in a channel, calling some video enhancement APIs occasionally failed.

- When a user left a channel, the leave request was not sent to the server, so the server incorrectly treated the user's departure as a timeout.

API changes

Added

- enableInstantMediaRendering
- startMediaRenderingTracing
- startMediaRenderingTracingEx
- onVideoRenderingTracingResult
- MEDIA_RENDER_TRACE_EVENT
- VideoRenderingTracingInfo

Deleted

- enableRemoteSuperResolution
- superResolutionType in RemoteVideoStats

v4.1.0

v4.1.0 was released on December 15, 2022.

New features

1. Headphone equalization effect

This release adds the setHeadphoneEQParameters method, which is used to adjust the low- and high-frequency parameters of the headphone EQ. This is mainly useful in spatial audio scenarios. If you cannot achieve the expected headphone EQ effect after calling setHeadphoneEQPreset, you can call setHeadphoneEQParameters to adjust the EQ.

2. Encoded video frame observer

This release adds the setRemoteVideoSubscriptionOptions and setRemoteVideoSubscriptionOptionsEx methods. When you call the registerVideoEncodedFrameObserver method to register a video frame observer for the encoded video frames, the SDK subscribes to the encoded video frames by default. If you want to change the subscription options, you can call these new methods to set them.

For more information about registering video observers and subscription options, see the API reference.

3. MPUDP (MultiPath UDP) (Beta)

As of this release, the SDK supports MPUDP protocol, which enables you to connect and use multiple paths to maximize the use of channel resources based on the UDP protocol. You can use different physical NICs on both mobile and desktop and aggregate them to effectively combat network jitter and improve transmission quality.

To enable this feature, contact support@agora.io.

4. Camera capture options

This release adds the followEncodeDimensionRatio member in CameraCapturerConfiguration, which enables you to set whether to follow the video aspect ratio already set in setVideoEncoderConfiguration when capturing video with the camera.

5. Multi-channel management

This release adds a series of multi-channel related methods that you can call to manage audio and video streams in multi-channel scenarios.

- The muteLocalAudioStreamEx and muteLocalVideoStreamEx methods are used to cancel or resume publishing a local audio or video stream, respectively.

- The muteAllRemoteAudioStreamsEx and muteAllRemoteVideoStreamsEx methods are used to cancel or resume the subscription to the audio or video streams of all remote users, respectively.

- The startRtmpStreamWithoutTranscodingEx, startRtmpStreamWithTranscodingEx, updateRtmpTranscodingEx, and stopRtmpStreamEx methods are used to implement Media Push in multi-channel scenarios.

- The startChannelMediaRelayEx, updateChannelMediaRelayEx, pauseAllChannelMediaRelayEx, resumeAllChannelMediaRelayEx, and stopChannelMediaRelayEx methods are used to relay media streams across channels in multi-channel scenarios.

- The leaveChannelEx[2/2] method is added. Compared with leaveChannelEx[1/2], it has a new options parameter, which lets you choose whether to stop microphone recording when leaving a channel in a multi-channel scenario.

6. Video encoding preferences

In general scenarios, the default video encoding configuration meets most requirements. For certain specific scenarios, this release adds the advanceOptions member in VideoEncoderConfiguration for advanced settings of video encoding properties:

- compressionPreference: The compression preference for video encoding, which is used to choose between low-latency and high-quality video.

- encodingPreference: The video encoder preference, which is used to choose between adaptive, software encoder, and hardware encoder preferences.

7. Client role switching

To let users know whether the switched user role is low-latency or ultra-low-latency, this release adds the newRoleOptions parameter to the onClientRoleChanged callback. This parameter can take the following values:

- AUDIENCE_LATENCY_LEVEL_LOW_LATENCY(1): Low latency.
- AUDIENCE_LATENCY_LEVEL_ULTRA_LOW_LATENCY(2): Ultra-low latency.

8. Brand-new AI Noise Suppression

The SDK supports a new version of Noise Suppression (in comparison to the basic Noise Suppression in v3.7.x). The new AI Noise Suppression has better vocal fidelity, cleaner noise suppression, and adds a dereverberation option. To enable this feature, contact support@agora.io.

9. Spatial audio effect

This release adds the following features for spatial audio effect scenarios, which can enhance the user's sense of presence in virtual interactive scenarios.

- Sound insulation area: You can set a sound insulation area and sound attenuation parameter by calling setZones. When the sound source (which can be a user or the media player) and the listener are on opposite sides of the sound insulation area, one inside and one outside, the listener hears an attenuation effect similar to real-world sound passing through a building partition. You can also set the sound attenuation parameter for the media player and the user, respectively, by calling setPlayerAttenuation and setRemoteAudioAttenuation, and specify whether that setting overrides the sound attenuation parameter in setZones.

- Doppler sound: You can enable Doppler sound by setting the enable_doppler parameter in SpatialAudioParams. The receiver then hears noticeable tonal changes when the sound source and receiver move quickly relative to each other (such as in a racing game scenario).

- Headphone equalizer: You can use a preset headphone equalization effect by calling the setHeadphoneEQPreset method to improve the listening experience with headphones.

Improvements

1. Bluetooth permissions

To simplify integration, as of this release, you can use the SDK to enable Android users to use Bluetooth normally without adding the BLUETOOTH_CONNECT permission.

2. CDN streaming

To improve user experience during CDN streaming, when your camera does not support the video resolution you set when streaming, the SDK automatically adjusts the resolution to the closest value that is supported by your camera and has the same aspect ratio as the original video resolution you set. The actual video resolution used by the SDK for streaming can be obtained through the onDirectCdnStreamingStats callback.
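The resolution fallback described above can be sketched as follows. The notes do not define "closest". This hypothetical helper assumes closest by pixel count among camera-supported sizes with exactly the same aspect ratio. The SDK's actual selection logic may differ.

```java
import java.util.List;

// Hypothetical sketch of the fallback rule; not the SDK's implementation.
final class ResolutionPicker {
    // Returns {width, height} of the supported size with the same aspect ratio as the request
    // whose pixel count is closest to the request, or null if no supported size matches.
    static int[] closestSameAspect(int reqW, int reqH, List<int[]> supported) {
        long reqArea = (long) reqW * reqH;
        int[] best = null;
        long bestDiff = Long.MAX_VALUE;
        for (int[] s : supported) {
            // Cross-multiplication compares w/h ratios exactly, without floating-point error.
            boolean sameAspect = (long) s[0] * reqH == (long) s[1] * reqW;
            if (!sameAspect) {
                continue;
            }
            long diff = Math.abs((long) s[0] * s[1] - reqArea);
            if (diff < bestDiff) {
                bestDiff = diff;
                best = s;
            }
        }
        return best;
    }
}
```

For example, a 3840 × 2160 request on a camera that supports 1920 × 1080 and 1280 × 720 (both 16:9) falls back to 1920 × 1080. A 4:3 size such as 1440 × 1080 is never chosen for a 16:9 request.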

3. Relaying media streams across channels

This release optimizes the updateChannelMediaRelay method as follows:

- Before v4.1.0: If the target channel update fails due to internal reasons in the server, the SDK returns the error code RELAY_EVENT_PACKET_UPDATE_DEST_CHANNEL_REFUSED(8), and you need to call the updateChannelMediaRelay method again.

- v4.1.0 and later: If the target channel update fails due to internal server reasons, the SDK retries the update until it succeeds.

4. Reconstructed AIAEC algorithm

This release rebuilds the AEC algorithm using AI. Compared with the traditional AEC algorithm, the new algorithm keeps near-end vocals complete, clear, and smooth even under poor echo-to-signal conditions, significantly improving echo cancellation and double-talk performance. This gives users a more comfortable call and live-broadcast experience. AIAEC is suitable for conference calls, chats, karaoke, and other scenarios.

5. Virtual background

This release optimizes the virtual background algorithm. Improvements include the following:

- The boundaries of virtual backgrounds are handled in a more nuanced way, and the image matting edge is now extremely thin.

- The stability of the virtual background is improved whether the portrait is still or moving, effectively eliminating the problem of background flickering and exceeding the range of the picture.

- More application scenarios are now supported, and a user obtains a good virtual background effect day or night, indoors or out.

- A larger variety of postures are now recognized, including when half the body is motionless, the body is shaking, the hands are swinging, or the fingers make fine movements. This helps achieve a good virtual background effect across many different gestures.

Other improvements

This release includes the following additional improvements:

- Reduces the latency when pushing external audio sources.

- Improves the performance of echo cancellation when using the AUDIO_SCENARIO_MEETING scenario.

- Improves the smoothness of SDK video rendering.

- Enhances the ability to identify different network protocol stacks and improves the SDK's access capabilities in multiple-operator network scenarios.

Issues fixed

This release fixed the following issues:

- Audience members heard buzzing noises when the host switched between speakers and earphones during live streaming.

- Calling getExtensionProperty failed and returned an empty string.

- When an audience member joined a live stream that had been running for a long time, the first frame took too long to render. This time has been shortened.

API changes

Added

- setHeadphoneEQParameters
- setRemoteVideoSubscriptionOptions
- setRemoteVideoSubscriptionOptionsEx
- VideoSubscriptionOptions
- leaveChannelEx[2/2]
- muteLocalAudioStreamEx
- muteLocalVideoStreamEx
- muteAllRemoteAudioStreamsEx
- muteAllRemoteVideoStreamsEx
- startRtmpStreamWithoutTranscodingEx
- startRtmpStreamWithTranscodingEx
- updateRtmpTranscodingEx
- stopRtmpStreamEx
- startChannelMediaRelayEx
- updateChannelMediaRelayEx
- pauseAllChannelMediaRelayEx
- resumeAllChannelMediaRelayEx
- stopChannelMediaRelayEx
- followEncodeDimensionRatio in CameraCapturerConfiguration
- hwEncoderAccelerating in LocalVideoStats
- advanceOptions in VideoEncoderConfiguration
- newRoleOptions in onClientRoleChanged
- adjustUserPlaybackSignalVolumeEx
- IAgoraMusicContentCenter interface class and methods in it
- IAgoraMusicPlayer interface class and methods in it
- IMusicContentCenterEventHandler interface class and callbacks in it
- Music class
- MusicChartInfo class
- MusicContentCenterConfiguration class
- MvProperty class
- ClimaxSegment class

Deprecated

onApiCallExecuted. Use the callbacks triggered by specific methods instead.

Deleted

- Removes the deprecated member parameters backgroundImage and watermark in the LiveTranscoding class.

- Removes RELAY_EVENT_PACKET_UPDATE_DEST_CHANNEL_REFUSED(8) in the onChannelMediaRelayEvent callback.

v4.0.1

v4.0.1 was released on September 29, 2022.

Compatibility changes

This release deletes the sourceType parameter in enableDualStreamMode [3/3] and enableDualStreamModeEx, as well as the enableDualStreamMode [2/3] method. Because the SDK now supports enabling dual-stream mode for video sources captured either by custom capture or by the SDK, you no longer need to specify the video source type.

New features

1. In-ear monitoring

This release adds the getEarMonitoringAudioParams callback for setting the audio data format of in-ear monitoring. You can use your own audio effect processing module to pre-process the in-ear monitoring audio frames and implement custom audio effects. After calling registerAudioFrameObserver to register the audio observer, set the audio data format in the return value of the getEarMonitoringAudioParams callback. The SDK calculates the sampling interval from this return value and triggers the onEarMonitoringAudioFrame callback at that interval.
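As a rough guide to choosing the value returned from getEarMonitoringAudioParams, the sketch below assumes the sampling-interval formula Agora documents for its audio frame parameters: interval (s) = samplesPerCall / (sampleRate × channels). It also assumes Agora's related guidance that this interval be at least 0.01 s applies here. `EarMonitorParams` is a hypothetical helper, not an SDK class.

```java
// Hypothetical helper; the formula is an assumption taken from Agora's audio frame parameter docs.
final class EarMonitorParams {
    // Sampling interval in seconds: samplesPerCall / (sampleRate * channels).
    static double intervalSec(int sampleRate, int channels, int samplesPerCall) {
        return (double) samplesPerCall / ((long) sampleRate * channels);
    }

    // Smallest samplesPerCall whose interval is at least minIntervalMs.
    // Integer ceiling division avoids floating-point rounding surprises.
    static long minSamplesPerCall(int sampleRate, int channels, int minIntervalMs) {
        long numerator = (long) minIntervalMs * sampleRate * channels;
        return (numerator + 999) / 1000;
    }
}
```

For example, 48 kHz stereo audio with 960 samples per call gives an interval of 0.01 s.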

2. Audio capture device test

This release adds support for testing local audio capture devices before joining a channel. You can call startRecordingDeviceTest to start the audio capture device test. After the test is complete, call the stopRecordingDeviceTest method to stop the audio capture device test.

3. Local network connection types

To make it easier for users to know the connection type of the local network at any stage, this release adds the getNetworkType method. You can use this method to get the type of network connection in use, including UNKNOWN, DISCONNECTED, LAN, WIFI, 2G, 3G, 4G, 5G. When the local network connection type changes, the SDK triggers the onNetworkTypeChanged callback to report the current network connection type.

4. Audio stream filter

This release introduces volume-based filtering of audio streams. Once this function is enabled, the Agora server ranks all audio streams by volume and, by default, forwards the 3 loudest audio streams to the receivers. The number of forwarded audio streams can be adjusted: contact support@agora.io to set it according to your scenarios.

Agora also lets publishers choose whether the audio streams they publish are subject to volume-based filtering. Streams that are not filtered bypass this mechanism and are forwarded directly to the receivers. In scenarios with many publishers, enabling this function helps reduce bandwidth usage and device load for the receivers.

To enable this function, contact support@agora.io.
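The forwarding rule described above can be modeled as follows. This is a conceptual sketch of the behavior the notes describe, not Agora's server implementation. `AudioFilterModel` is hypothetical, and its `filterable` flag presumably corresponds to the publisher's isAudioFilterable setting in ChannelMediaOptions.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Conceptual model of volume-based forwarding; not Agora's server implementation.
final class AudioFilterModel {
    static final class Source {
        final int uid;
        final int volume;
        final boolean filterable; // stands in for the publisher's filtering choice

        Source(int uid, int volume, boolean filterable) {
            this.uid = uid;
            this.volume = volume;
            this.filterable = filterable;
        }
    }

    // Returns the UIDs forwarded to a receiver: every non-filterable stream, plus the topN
    // loudest filterable streams (the release notes give 3 as the service default).
    static List<Integer> forwardedUids(List<Source> sources, int topN) {
        List<Integer> forwarded = new ArrayList<>();
        List<Source> ranked = new ArrayList<>();
        for (Source src : sources) {
            if (src.filterable) {
                ranked.add(src);
            } else {
                forwarded.add(src.uid); // bypasses the volume filter
            }
        }
        ranked.sort(Comparator.comparingInt((Source s) -> s.volume).reversed());
        for (int i = 0; i < Math.min(topN, ranked.size()); i++) {
            forwarded.add(ranked.get(i).uid);
        }
        return forwarded;
    }
}
```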

5. Dual-stream mode

This release optimizes the dual-stream mode: you can call enableDualStreamMode and enableDualStreamModeEx both before and after joining a channel.

There are now more ways to subscribe to the low-quality video stream. By default, the SDK enables the low-quality video stream auto mode on the sender, in which the SDK does not send low-quality video streams until they are requested. To enable sending low-quality video streams, follow these steps:

- The host at the receiving end calls setRemoteVideoStreamType or setRemoteDefaultVideoStreamType to request a low-quality video stream.

- After receiving the request, the sender automatically switches to sending low-quality video streams.

To change this default behavior, call setDualStreamMode [1/2] or setDualStreamMode [2/2] and set the mode parameter to DISABLE_SIMULCAST_STREAM (never send low-quality video streams) or ENABLE_SIMULCAST_STREAM (always send low-quality video streams).
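The sender-side decision in the three modes above fits in one small function. `DualStreamModel` is hypothetical. Its enum mirrors the two documented constants, plus an AUTO_SIMULCAST_STREAM value that stands in for the default auto mode. The exact name of that value is assumed here.

```java
// Hypothetical model of the sender's dual-stream decision; not SDK code.
final class DualStreamModel {
    // AUTO_SIMULCAST_STREAM is an assumed name for the default auto mode.
    enum Mode { AUTO_SIMULCAST_STREAM, DISABLE_SIMULCAST_STREAM, ENABLE_SIMULCAST_STREAM }

    // Whether the sender publishes the low-quality stream, given its mode and whether
    // any receiver has requested the low-quality stream.
    static boolean sendsLowStream(Mode mode, boolean lowStreamRequested) {
        switch (mode) {
            case ENABLE_SIMULCAST_STREAM:
                return true;   // always send
            case DISABLE_SIMULCAST_STREAM:
                return false;  // never send
            default:
                return lowStreamRequested; // auto: send only after a receiver asks
        }
    }
}
```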

Improvements

1. Video information change callback

This release optimizes the trigger logic of onVideoSizeChanged: the callback is now also triggered to report changes to the local video size when startPreview is called on its own.

Issues fixed

This release fixes the following issues.

- Calling setVideoEncoderConfigurationEx in a channel to increase the video resolution occasionally failed.

- In online meeting scenarios, the local user and the remote user might not hear each other after the local user was interrupted by a call.

- After setCloudProxy was called to set the cloud proxy, calling joinChannelEx to join multiple channels failed.

- When the Agora media player was used to play a video, if you played and paused the video and then called the seek method to specify a new playback position, the video image might remain unchanged; if you then called the resume method to resume playback, the video might play faster than its original speed.

API changes

Added

- getEarMonitoringAudioParams
- startRecordingDeviceTest
- stopRecordingDeviceTest
- getNetworkType
- isAudioFilterable in ChannelMediaOptions
- setDualStreamMode[1/2]
- setDualStreamMode[2/2]
- setDualStreamModeEx
- SIMULCAST_STREAM_MODE
- setZones
- setPlayerAttenuation
- setRemoteAudioAttenuation
- muteRemoteAudioStream
- SpatialAudioParams
- setHeadphoneEQPreset
- HEADPHONE_EQUALIZER_PRESET

Modified

- enableDualStreamMode[1/3]
- enableDualStreamMode[3/3]
- enableDualStreamModeEx

Deprecated

startEchoTest[2/3]

Deleted

enableDualStreamMode[2/3]

v4.0.0

v4.0.0 was released on September 15, 2022.

Compatibility changes

1. Integration change

This release has optimized the implementation of some features, resulting in incompatibility with v3.7.x. The following are the main features with compatibility changes:

- Multiple channels

- Media stream publishing control

- Custom video capture and rendering (Media IO)

- Warning codes

After upgrading the SDK, you need to update the code in your app according to your business scenarios. For details, see Migrate from v3.7.x to v4.0.0.

2. Callback exception handling

To facilitate troubleshooting, as of this release, the SDK no longer catches exceptions that are thrown by your own code implementation when triggering callbacks in the IRtcEngineEventHandler class. You need to catch and handle the exceptions yourself; otherwise, it can cause a crash.

New features

1. Multiple media tracks

This release enables a single RtcEngine instance to capture multiple audio and video sources at the same time and publish them to remote users through RtcEngineEx and ChannelMediaOptions.

After calling joinChannel to join the first channel, call joinChannelEx multiple times to join multiple channels, and publish the specified stream to each channel through different user IDs (localUid) and ChannelMediaOptions settings.

This release also adds the createCustomVideoTrack method for custom video capture. To publish multiple custom-captured video streams in the channel, take the following steps:

- Create a custom video track: Call createCustomVideoTrack to create a video track and get its video track ID.

- Set the custom video track to publish in each channel: In each channel's ChannelMediaOptions, set customVideoTrackId to the ID of the video track you want to publish, and set publishCustomVideoTrack to true.

- Push the external video source: Call pushVideoFrame and set videoTrackId to the custom video track ID specified in the previous step, so that the corresponding custom video source is published in multiple channels.
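To make the relationship between track IDs and channels concrete, here is a hypothetical Java model of the three steps. It does not call the SDK: ChannelOptionsModel only mimics the customVideoTrackId and publishCustomVideoTrack fields of ChannelMediaOptions, and the createTrack, configureChannel, and pushFrame methods stand in for createCustomVideoTrack, joining a channel with those options, and pushVideoFrame. It shows that one pushed frame reaches every channel whose options publish that track ID.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for two ChannelMediaOptions fields; not the SDK class.
final class ChannelOptionsModel {
    int customVideoTrackId = -1;             // -1 means "no track assigned" in this model
    boolean publishCustomVideoTrack = false;
}

// Hypothetical model of custom-track routing across channels; no SDK calls.
final class CustomTrackRouterSketch {
    private int nextTrackId = 1;
    // Insertion-ordered so that results are predictable.
    private final Map<String, ChannelOptionsModel> channels = new LinkedHashMap<>();

    // Step 1 (cf. createCustomVideoTrack): allocate a new track ID.
    int createTrack() {
        return nextTrackId++;
    }

    // Step 2 (cf. joining with ChannelMediaOptions): store a channel's options.
    void configureChannel(String channelId, ChannelOptionsModel options) {
        channels.put(channelId, options);
    }

    // Step 3 (cf. pushVideoFrame): list the channels that receive a frame
    // pushed on the given track.
    List<String> pushFrame(int videoTrackId) {
        List<String> targets = new ArrayList<>();
        for (Map.Entry<String, ChannelOptionsModel> e : channels.entrySet()) {
            ChannelOptionsModel o = e.getValue();
            if (o.publishCustomVideoTrack && o.customVideoTrackId == videoTrackId) {
                targets.add(e.getKey());
            }
        }
        return targets;
    }
}
```

In this model, assigning the same track ID to two channels publishes one custom source in both, while a second track can feed a different channel independently.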

You can also experience the following features with the multi-channel capability:

- Publish multiple sets of audio and video streams to remote users through different user IDs (uid).

- Mix multiple audio streams and publish them to remote users through one user ID (uid).

- Combine multiple video streams and publish them to remote users through one user ID (uid).

2. Full HD and Ultra HD resolution (Beta)

In order to improve the interactive video experience, the SDK optimizes the whole process of video capturing, encoding, decoding, and rendering. Starting from this version, it supports Full HD (FHD) and Ultra HD (UHD) video resolutions. You can set the dimensions parameter to 1920 × 1080 or higher when calling the setVideoEncoderConfiguration method. If your device does not support high resolutions, the SDK automatically falls back to an appropriate resolution.

The UHD resolution (4K, 60 fps) is currently in beta and requires certain device performance and network bandwidth. If you want to enable this feature, contact technical support.

High resolution typically means higher performance consumption. To avoid a decrease in experience due to insufficient device performance, Agora recommends that you enable FHD and UHD video resolutions on devices with better performance.

The increase in the default resolution affects the aggregate resolution and thus the billing rate. See Pricing.
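As a rough worked example of why higher resolutions matter for billing, the sketch below sums width × height over the video streams that one user subscribes to. It assumes aggregate resolution is computed this way; the thresholds that map a total to a billing rate are not reproduced here, so check Pricing for them. The class name AggregateResolutionSketch is hypothetical.

```java
// Hypothetical helper: aggregate resolution as the sum of pixel counts
// of all video streams one user subscribes to (assumed definition).
final class AggregateResolutionSketch {
    // Each entry is {width, height} of one subscribed stream.
    static long aggregatePixels(int[][] subscribedResolutions) {
        long total = 0;
        for (int[] wh : subscribedResolutions) {
            total += (long) wh[0] * wh[1]; // widen before multiplying
        }
        return total;
    }
}
```

For instance, a single 1920 × 1080 stream totals 2,073,600 pixels, which is more than two 1280 × 720 streams combined (1,843,200), and a single 3840 × 2160 stream totals 8,294,400.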

3. Agora media player

To make it easier for users to integrate the Agora SDK and reduce the SDK's package size, this release introduces the Agora media player. After calling the createMediaPlayer method to create a media player object, you can then call the methods in the IMediaPlayer class to experience a series of functions, such as playing local and online media files, preloading a media file, changing the CDN route for playing according to your network conditions, or sharing the audio and video streams being played with remote users.

4. Ultra-high audio quality

To make the audio clearer and restore more details, this release adds the ULTRA_HIGH_QUALITY_VOICE enumeration. In scenarios that mainly feature the human voice, such as chat or singing, you can call setVoiceBeautifierPreset and use this enumeration to experience ultra-high audio quality.

5. Spatial audio

This feature is experimental. To enable it, contact support@agora.io.

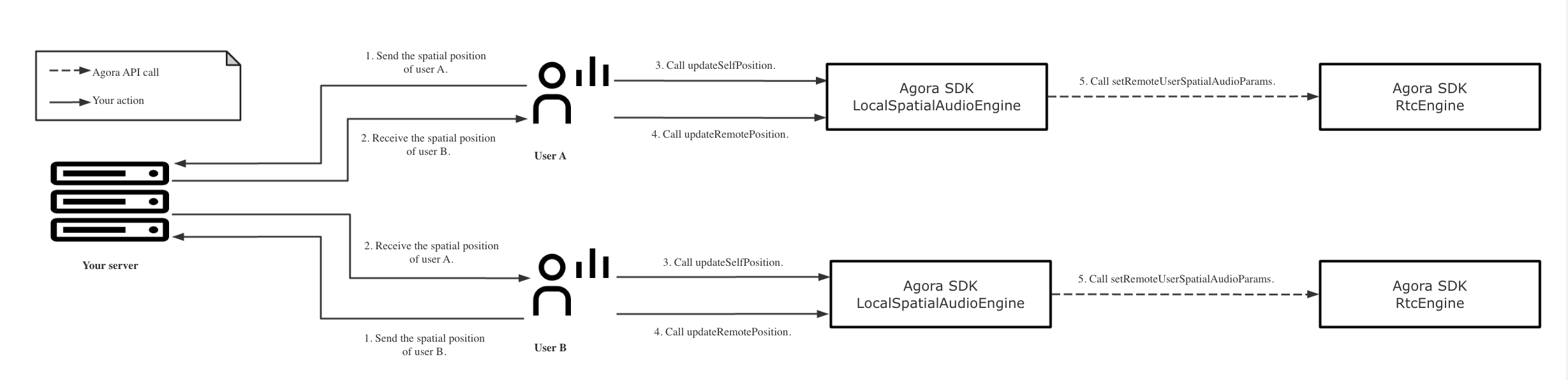

You can set spatial audio for a remote user as follows:

- Local Cartesian Coordinate System Calculation: This solution uses the ILocalSpatialAudioEngine class to implement spatial audio by calculating the spatial coordinates of the remote user. Call updateSelfPosition and updateRemotePosition to update the spatial coordinates of the local and remote users, respectively, so that the local user can hear the spatial audio effect of the remote user.

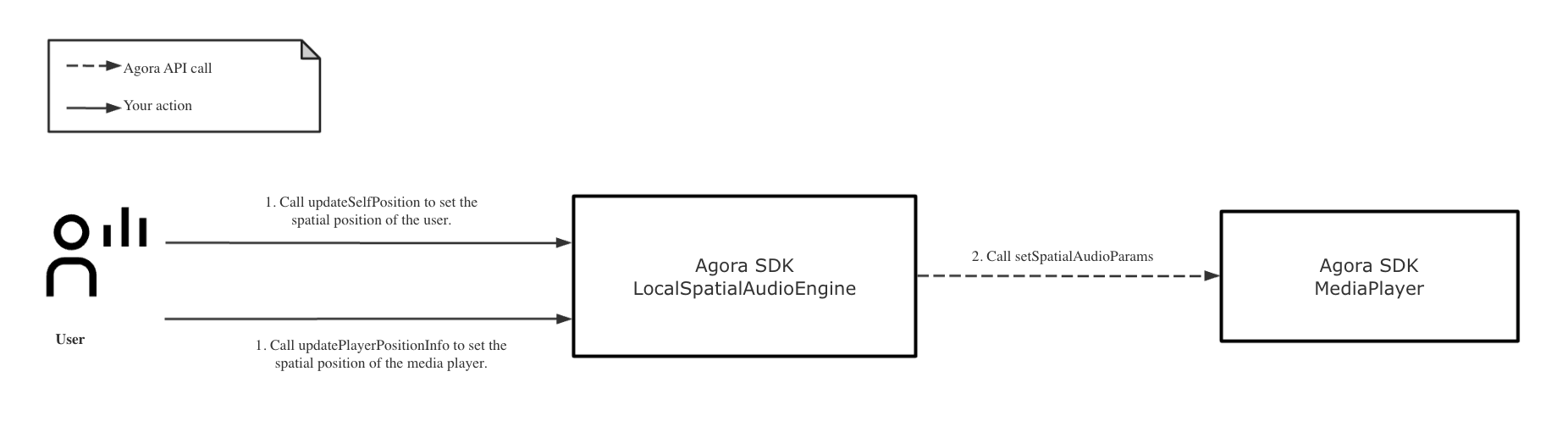

You can also set spatial audio for the media player as follows:

- Local Cartesian Coordinate System Calculation: This solution uses the ILocalSpatialAudioEngine class to implement spatial audio. Call updateSelfPosition and updatePlayerPositionInfo to update the spatial coordinates of the local user and the media player, respectively, so that the local user can hear the spatial audio effect of the media player.
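The SDK computes the spatial effect internally from the positions passed to updateSelfPosition, updateRemotePosition, and updatePlayerPositionInfo. To illustrate what a local Cartesian calculation involves, the hypothetical Java sketch below derives the distance and horizontal angle of a sound source relative to the listener, assuming the listener's position plus unit forward and right axis vectors. It is not the engine's algorithm and uses no SDK types.

```java
// Hypothetical geometry helper; not part of ILocalSpatialAudioEngine.
final class SpatialGeometrySketch {
    static double[] sub(double[] a, double[] b) {
        return new double[] {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    // Straight-line distance from the listener to the source.
    static double distance(double[] self, double[] source) {
        double[] d = sub(source, self);
        return Math.sqrt(dot(d, d));
    }

    // Horizontal angle of the source in degrees: 0 = straight ahead,
    // +90 = to the listener's right, -90 = to the left.
    // forward and right are assumed to be unit vectors.
    static double azimuthDegrees(double[] self, double[] forward, double[] right, double[] source) {
        double[] d = sub(source, self);
        return Math.toDegrees(Math.atan2(dot(d, right), dot(d, forward)));
    }
}
```

Projecting the offset onto the listener's own axes is what makes the result "local": turning the listener (changing forward and right) changes the angle even if nobody moves.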

6. Real-time chorus

This release gives real-time chorus the following abilities:

- Choruses of two or more singers are supported.

- Singers are independent of each other: if one singer fails or quits the chorus, the others can continue to sing.

- Very low latency: singers can hear each other in real time, and the audience can also hear each singer in real time.

This release adds the AUDIO_SCENARIO_CHORUS enumeration. With this enumeration, users can experience ultra-low latency in real-time chorus when the network conditions are good.

7. Extensions from the Agora extensions marketplace

In order to enhance the real-time audio and video interactive activities based on the Agora SDK, this release supports the one-stop solution for the extensions from the Agora extensions marketplace:

- Easy to integrate: The integration of modular functions can be achieved simply by calling an API, and the integration efficiency is improved by nearly 95%.

- Extensibility design: The modular and extensible SDK design style endows the Agora SDK with good extensibility, which enables developers to quickly build real-time interactive apps based on the Agora extensions marketplace ecosystem.

- Build an ecosystem: A community of real-time audio and video apps has developed that can accommodate a wide range of developers, offering a variety of extension combinations. After integrating the extensions, developers can build richer real-time interactive functions. For details, see Use an Extension.

- Become a vendor: Vendors can integrate their products with Agora SDK in the form of extensions, display and publish them in the Agora extensions marketplace, and build a real-time interactive ecosystem for developers together with Agora. For details on how to develop and publish extensions, see Become a Vendor.

8. Enhanced channel management

To meet the channel management requirements of various business scenarios, this release adds the following functions to the ChannelMediaOptions structure:

- Sets or switches the publishing of multiple audio and video sources.

- Sets or switches channel profile and user role.

- Sets or switches the stream type of the subscribed video.

- Controls audio publishing delay.

Set ChannelMediaOptions when calling joinChannel or joinChannelEx to specify the publishing and subscription behavior of a media stream, for example, whether to publish video streams captured by cameras or screen sharing, and whether to subscribe to the audio and video streams of remote users. After joining the channel, call updateChannelMediaOptions to update the settings in ChannelMediaOptions at any time, for example, to switch the published audio and video sources.

9. Screen sharing

This release optimizes the screen sharing function. You can enable this function in the following ways.

- Call startScreenCapture before joining a channel, and then call joinChannel[2/2] to join the channel with publishScreenCaptureVideo set to true.

- Call startScreenCapture after joining a channel, and then call updateChannelMediaOptions to set publishScreenCaptureVideo to true.

10. Subscription allowlists and blocklists

This release introduces subscription allowlists and blocklists for remote audio and video streams. You can add the user IDs whose streams you want to subscribe to in an allowlist, or add the user IDs whose streams you do not want to receive to a blocklist. Use the following APIs; in scenarios that involve multiple channels, call the corresponding methods in the RtcEngineEx interface:

- setSubscribeAudioBlacklist: Sets the audio subscription blocklist.
- setSubscribeAudioWhitelist: Sets the audio subscription allowlist.
- setSubscribeVideoBlacklist: Sets the video subscription blocklist.
- setSubscribeVideoWhitelist: Sets the video subscription allowlist.

If a user is in both a blocklist and an allowlist, only the blocklist takes effect.
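The precedence rule above fits in a small predicate. In this hypothetical Java sketch, the blocklist is checked first so that it always wins. It also assumes that an empty allowlist means no allowlist restriction, which these notes do not state, so confirm the SDK's actual behavior before relying on it. SubscriptionFilterSketch and shouldSubscribe are illustrative names, not SDK APIs.

```java
import java.util.Set;

// Hypothetical model of allowlist/blocklist precedence; not SDK code.
final class SubscriptionFilterSketch {
    // Returns whether a remote user's stream should be subscribed to.
    static boolean shouldSubscribe(int uid, Set<Integer> allowlist, Set<Integer> blocklist) {
        if (blocklist.contains(uid)) {
            return false; // the blocklist takes precedence over the allowlist
        }
        // Assumption: an empty allowlist places no restriction.
        return allowlist.isEmpty() || allowlist.contains(uid);
    }
}
```

A user listed in both sets is rejected, which matches the rule that only the blocklist takes effect.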

11. Set audio scenarios

To make it easier to change audio scenarios, this release adds the setAudioScenario method. For example, if you want to change the audio scenario from AUDIO_SCENARIO_DEFAULT to AUDIO_SCENARIO_GAME_STREAMING when you are in a channel, you can call this method.

Improvements

1. Fast channel switching

With this release, calling leaveChannel and then joinChannel switches channels as fast as switchChannel did in v3.7.x, so you no longer need to call switchChannel.

2. Push external video frames

This release supports pushing video frames in I422 format. You can call the pushExternalVideoFrame [1/2] method to push such video frames to the SDK.
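When preparing I422 buffers, it helps to know how large a frame is. I422 is planar YUV 4:2:2: a full-resolution Y plane plus U and V planes that are halved horizontally but not vertically. The Java sketch below computes the size of a tightly packed 8-bit frame, assuming no row padding (stride equals width), and compares it with I420, where chroma is halved in both directions. The class and method names are hypothetical, and how the SDK expects strides to be set is not covered here.

```java
// Hypothetical helper for planar YUV frame sizes (8-bit, no row padding).
final class YuvSizeSketch {
    // I422: Y is width x height; U and V are each ceil(width/2) x height.
    static int i422Size(int width, int height) {
        int chromaWidth = (width + 1) / 2; // 4:2:2 halves chroma horizontally only
        return width * height + 2 * chromaWidth * height;
    }

    // I420 for comparison: U and V are ceil(width/2) x ceil(height/2).
    static int i420Size(int width, int height) {
        int chromaWidth = (width + 1) / 2;
        int chromaHeight = (height + 1) / 2;
        return width * height + 2 * chromaWidth * chromaHeight;
    }
}
```

At 1280 × 720, an I422 frame takes 1,843,200 bytes versus 1,382,400 bytes for I420, so buffers sized for I420 are too small for I422 frames.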

3. Voice pitch of the local user

This release adds voicePitch in AudioVolumeInfo of onAudioVolumeIndication. You can use voicePitch to get the local user's voice pitch and perform business functions such as rating for singing.
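One way a singing-rating feature could use voicePitch is to measure how far each sample is from the target note in cents (1/100 of a semitone): cents = 1200 × log2(pitch / target). The Java sketch below assumes voicePitch is reported in Hz and that 0 means no voice was detected. The tolerance is left to the caller (50 cents in the example), and the class is hypothetical, not part of the SDK.

```java
// Hypothetical scoring helper; the rating rule is illustrative only.
final class PitchScoreSketch {
    // Deviation from the target note in cents (100 cents = 1 semitone).
    static double centsOff(double pitchHz, double targetHz) {
        return 1200.0 * Math.log(pitchHz / targetHz) / Math.log(2.0);
    }

    // A sample counts as on pitch within +/- toleranceCents.
    // A pitch of 0 or below is treated as silence and never counts.
    static boolean onPitch(double pitchHz, double targetHz, double toleranceCents) {
        if (pitchHz <= 0) {
            return false;
        }
        return Math.abs(centsOff(pitchHz, targetHz)) <= toleranceCents;
    }
}
```

Measuring in cents rather than raw Hz keeps the tolerance musically consistent across low and high notes, because a semitone spans more Hz at higher pitches.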

4. Device permission management

This release adds the onPermissionError callback, which is triggered when the audio capture device or camera has not been granted the required permission. You can then enable the corresponding device permission as the callback indicates.

5. Video preview

This release improves the implementation logic of startPreview. You can call the startPreview method to enable video preview at any time.

6. Video types of subscription

You can call the setRemoteDefaultVideoStreamType method to choose the video stream type when subscribing to streams.

Notifications

2022.10

- After you enable Notifications, your server receives the events that you subscribe to in the form of HTTPS requests.

- To improve communication security between the Notifications and your server, Agora SD-RTN™ uses signatures for identity verification.

- As of this release, you can use Notifications in conjunction with this product.
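A receiving server typically verifies such a signature by recomputing an HMAC over the raw request body with a shared secret and comparing the result with the value in a request header. The Java sketch below assumes a hex-encoded HMAC-SHA256 signature keyed with your Notifications secret. The header name, hash algorithm, and encoding are assumptions here, so check the Notifications documentation before using it. The sketch relies only on the standard javax.crypto and java.security APIs, and the class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Locale;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical verifier, assuming a hex-encoded HMAC-SHA256 of the raw body.
final class NotificationSignatureSketch {
    static String hmacSha256Hex(String secret, byte[] body) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "HmacSHA256"));
            byte[] digest = mac.doFinal(body);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b)); // lowercase, two digits per byte
            }
            return hex.toString();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 unavailable", e);
        }
    }

    // Compares in constant time so timing does not reveal how much matched.
    static boolean verify(String secret, byte[] rawBody, String signatureHeader) {
        String expected = hmacSha256Hex(secret, rawBody);
        String received = signatureHeader.trim().toLowerCase(Locale.ROOT);
        return MessageDigest.isEqual(
                expected.getBytes(StandardCharsets.UTF_8),
                received.getBytes(StandardCharsets.UTF_8));
    }
}
```

Pass the body bytes exactly as received, before any JSON parsing, because re-serialized JSON may not match the signed bytes.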

AI Noise Suppression

Agora charges additionally for this extension. See Pricing.

v1.1.0

Improvement

This release improves the calculation performance of the AI-powered noise suppression algorithm.

New features

This release adds the following APIs and parameters:

- APIs:
  - checkCompatibility: Checks whether the AI Noise Suppression extension is supported on the current browser.
  - setMode: Sets the noise suppression mode as AI noise suppression or stationary noise suppression.
  - setLevel: Sets the AI noise suppression level.

- Parameters:
  - elapsedTime in onoverload: Reports the time in ms that the extension needs to process one audio frame.

For API details, see AI Noise Suppression.

Compatibility changes

This release brings the following changes:

- AI Noise Suppression supports Agora Video SDK for Web v4.15.0 or later.

- The extension has Wasm dependencies only. Because JS dependencies are removed, you need to publish the Wasm files located in the node_modules/agora-extension-ai-denoiser/external directory again. If you have enabled the Content Security Policy (CSP), you need to modify the CSP configuration. See AI Noise Suppression for details.

- The audio data is dumped in PCM format instead of WAV format.

- To adjust the intensity of noise suppression, best practice is to call setLevel.

v1.0.0

First release.

Virtual Background

You may be charged for the usage of this extension. Contact support@agora.io for details.

v1.1.3

Fixed issues

This release fixes the occasional issue of jagged background images on Chrome for Android.

v1.1.2

New features

You can now specify the fit property when calling setOptions. This sets how the background is resized to fit the container. For API details, see Virtual background.
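To illustrate what a fit setting typically controls, the Java sketch below computes the drawn size of a background image for three modes named after CSS object-fit: contain, cover, and fill. Whether setOptions accepts exactly these values is an assumption here, so check the Virtual background API reference. The class is hypothetical and only shows the scaling arithmetic.

```java
// Hypothetical scaling helper; mode names follow CSS object-fit.
final class BackgroundFitSketch {
    // Returns {drawnWidth, drawnHeight} for an image placed in a container.
    static double[] fit(String mode, double imgW, double imgH, double boxW, double boxH) {
        switch (mode) {
            case "contain": {
                // Whole image visible; may leave empty bars.
                double s = Math.min(boxW / imgW, boxH / imgH);
                return new double[] {imgW * s, imgH * s};
            }
            case "cover": {
                // Container fully covered; the overflow is cropped.
                double s = Math.max(boxW / imgW, boxH / imgH);
                return new double[] {imgW * s, imgH * s};
            }
            case "fill":
                // Stretched to the container, ignoring aspect ratio.
                return new double[] {boxW, boxH};
            default:
                throw new IllegalArgumentException("unknown fit mode: " + mode);
        }
    }
}
```

For a 1920 × 1080 image in a 640 × 640 container, contain draws it at 640 × 360, while cover scales it to 640 pixels high and crops the sides.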

Compatibility changes

Virtual Background supports Agora Video SDK for Web v4.15.0 or later.

v1.1.1

New features

You can now call checkCompatibility to test whether the Virtual Background extension is supported on the current browser. For API details, see Virtual background.

Fixed issues

A black bar is no longer displayed to the left of the virtual background.

v1.1.0

New features

You can create multiple VirtualBackgroundProcessor instances to process multiple video streams.

v1.0.0

First release.